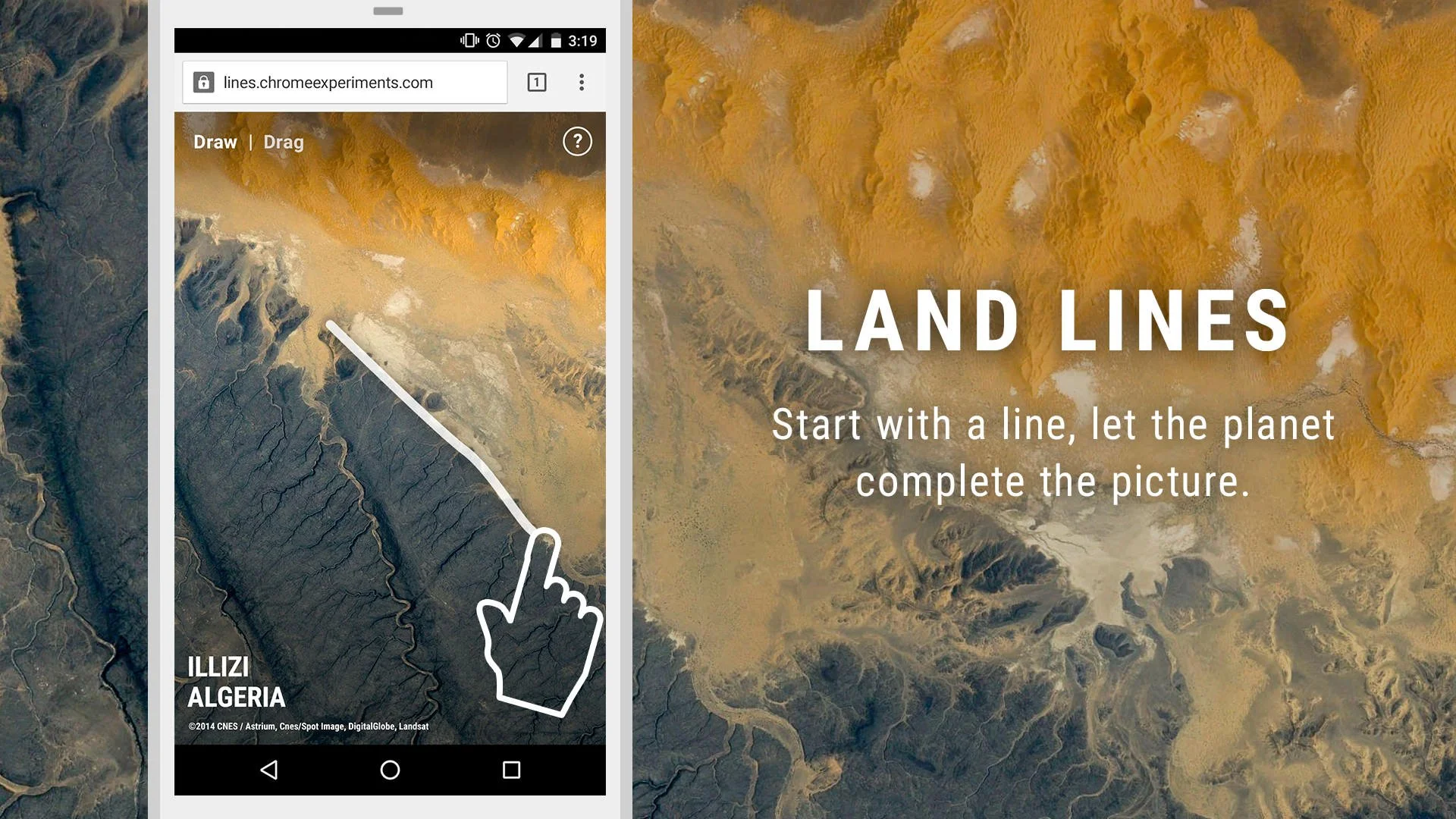

Start with a line, let the planet complete the picture.

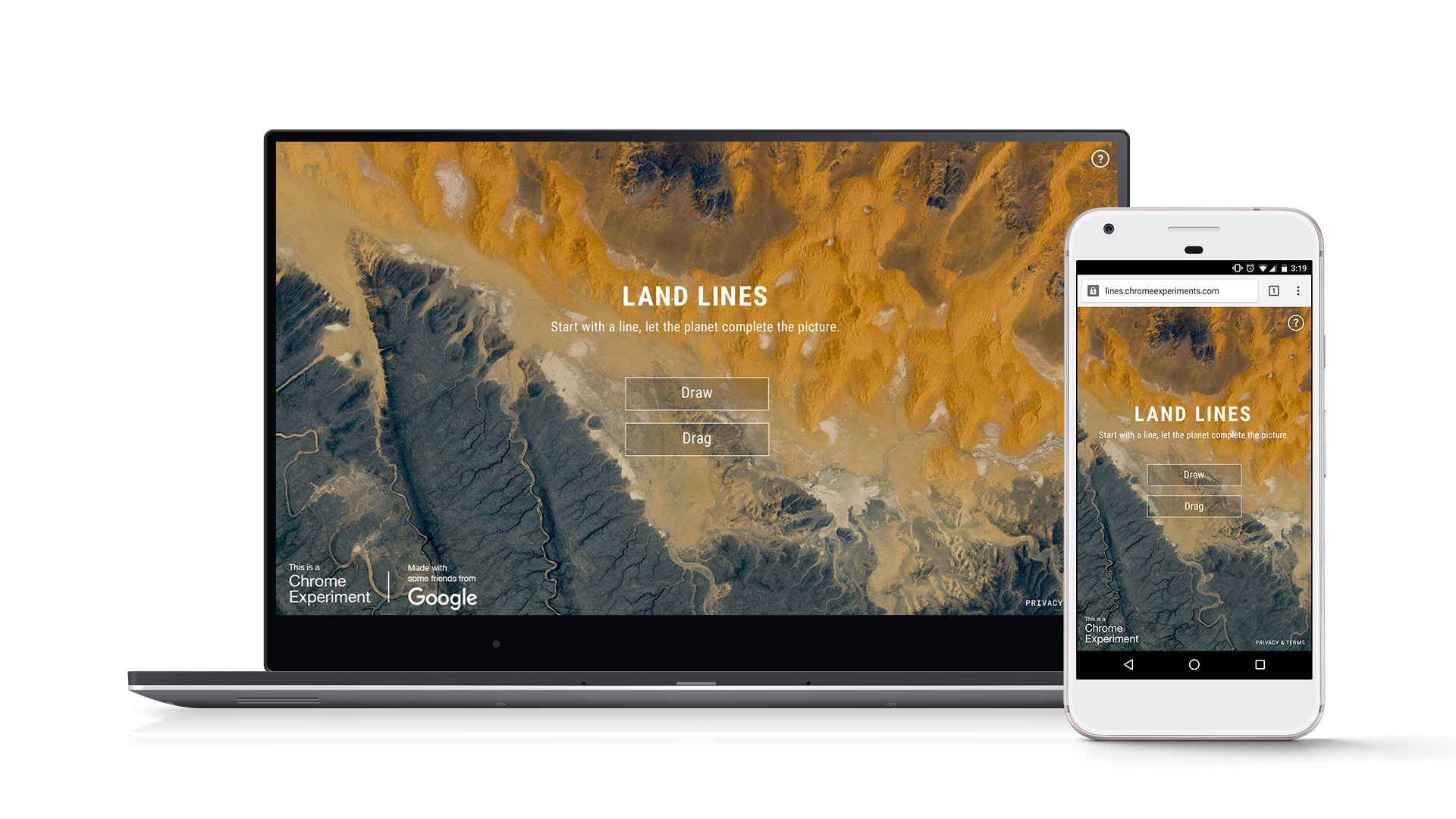

Satellite images provide a wealth of visual data that we can visualize in interesting ways. Land Lines is an experiment that lets you explore Google Earth satellite imagery through gesture. “Draw” to find satellite images that match your every line; “Drag” to create an infinite line of connected rivers, highways and coastlines.

Using a combination of machine learning, optimized algorithms, and graphics card power, the experiment is able to run efficiently on your phone’s web browser without a need for backend servers.

Learn more about how the project was created in this technical case study or browse the open-source code on GitHub.

We used a combination of OpenCV Structured Forests and ImageJ’s Ridge Detection to analyze and identify dominant visual lines in the initial dataset of 50,000+ images. This helped cull down the original dataset to just a few thousand of the most interesting images.

For the draw application, we stored the resulting line data in a vantage point tree. This data structure made it fast and easy to find matches from the dataset in real time right in your phone or desktop web browser.

We used Pixi.js, an open source library built upon the WebGL API, to rapidly draw and redraw 2D WebGL graphics without hindering performance.

All images are hosted on Google Cloud Storage so images are served quickly to users worldwide.

Made by Zach Lieberman, Matt Felsen, and the Data Arts Team. Special thanks to Local Projects.

An experiment exploring Google Earth satellite images through gesture. Doodle to begin.

Rated 4.2 out of 5 (10 ratings).

- Version 0.0.0.2

- Updated January 5, 2017

- Size 39.24KiB

- Languages English

Google Earth Blog

The amazing things about Google Earth

Land Lines Chrome experiment

December 19, 2016

Land Lines is an interesting Chrome experiment that uses Google Earth imagery. The experiment was made by Zach Lieberman, Matt Felsen, and the Data Arts Team. They have used the imagery from the Earth View Chrome extension and performed line detection on it. Learn more about the technical details here and either just try it out or see the YouTube video below to see it in action:

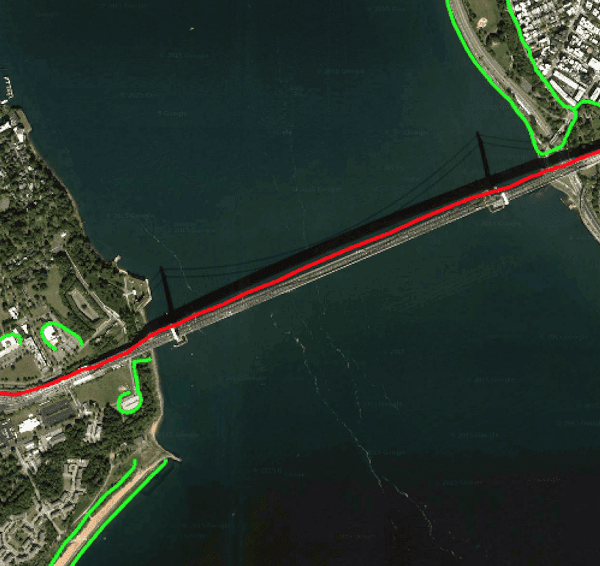

It has two modes, Draw and Drag. In the Draw mode, you draw a shape and it finds a matching image. The Drag mode makes more sense on mobile. You drag the image around and it creates a continuous line matching up images as required.

Although it is a ‘Chrome experiment’, it seems to work well in Firefox and Edge, but it would not load properly in Internet Explorer 11.

If Google were to release a more modern version of the Google Earth API, imagine what would be possible!

About Timothy Whitehead

Timothy has been using Google Earth since 2004, when it was still called Keyhole (it was renamed Google Earth in 2005), and has been a huge fan ever since. He is a programmer working for Red Wing Aerobatx and lives in Cape Town, South Africa.

Reader Interactions

April 19, 2017 at 7:11 pm

How do I turn it off?

Land Lines is an experiment that lets you explore Google Earth satellite images through gesture. Using a combination of machine learning, data optimization, and graphics card power, the experiment is able to run efficiently on your phone's web browser without a need for backend servers. This is a look into our development process and the various approaches we tried leading us to the final result.

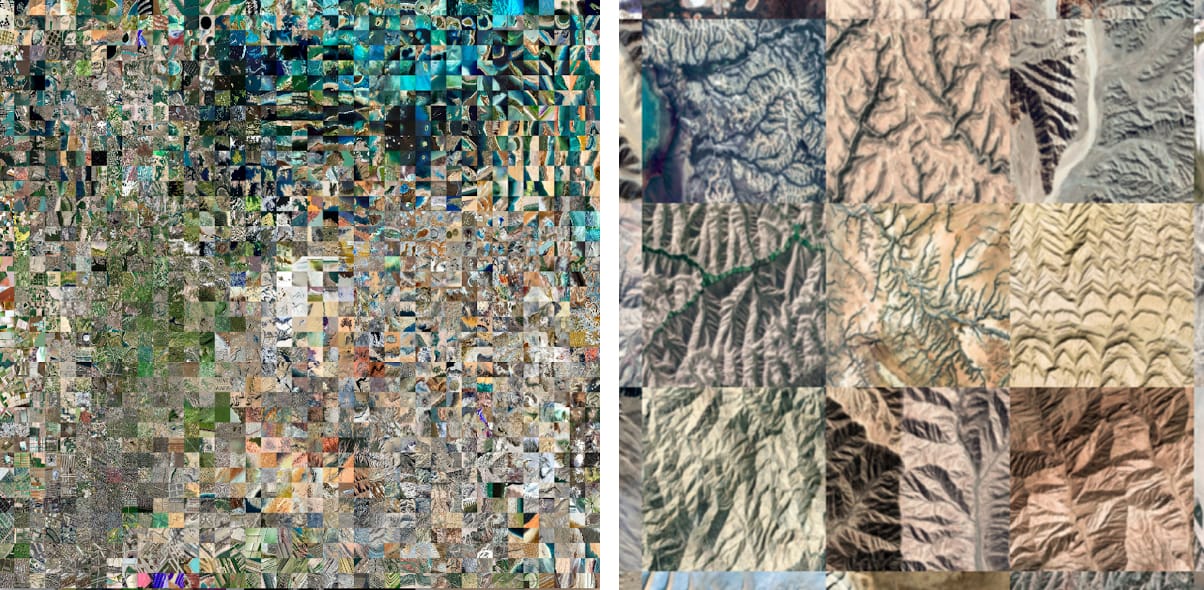

https://g.co/LandLines

When the Data Arts team approached me about exploring a data set of earth images I was quite excited - the images were beautiful, revealing all different kinds of structures and textures, both human-made and natural, and I was intrigued by how to connect this data set. I did a variety of initial experiments looking at image similarity and different ways of filtering and organizing the images.

As a group we kept coming back to the beautiful and dominant lines in the images. These lines were easy to spot - highways, rivers, edges of mountains and plots of land - and we designed a few projects to explore these. As an artist I was inspired by the beautiful things you can do with collections of lines - see for example Cassandra C Jones's work with lightning - and I was excited to work with this data set.

Line Detection

One of the initial challenges was how to detect lines in the images. It's easy to take out a piece of tracing paper, throw it on top of a printout of one of these photos, and draw the lines that your eye sees, but in general computer vision algorithms for finding lines tend not to work well across very diverse images.

I developed a previous version of the search-by-drawing algorithm on a project with Local Projects, and for that we hand-annotated the lines to search for. It was fun to draw on top of artworks but tedious as you move from dozens of images to thousands. I wanted to try to automate the process of finding lines.

With these aerial images I tried traditional line detection algorithms like OpenCV's Canny edge detection algorithm but found they gave either very discontinuous line segments or, if the thresholds were too relaxed, tons of spurious lines. Also, the thresholds to get good results were different across different image sets, and I wanted an algorithm for finding a consistent set of good lines without supervision.

I experimented with a variety of line detection algorithms, including recent ones like gPb (PDF), which produced amazing results but required minutes to run per image. In the end I settled on Structured Forest edge detection, an algorithm that ships with OpenCV.

Once I had a good "line image", I still had the problem of actually getting the lines and distinguishing individual lines from each other - i.e., how do I take this raster data and make it vector. Often when I'm looking at computer vision problems, I investigate ImageJ, an open-source, Java-based image processing environment used by scientists and researchers, which has a healthy ecosystem of plugins. I found a plugin called ridge detection, which helps take an intensity image and turn it into a set of line segments. (As a side note, I also found this edge detection and labeling code from Matlab useful.)

I also wanted to see if it's possible to do a data visualization app that's essentially serverless, where the hard work of matching and connecting happens client side. I usually work in openFrameworks, a C++ framework for creative coding, and besides the occasional Node project I haven't done a lot of server side coding. I was curious if it's possible to do all of the calculation client side and to use the server only for serving JSON and image data.

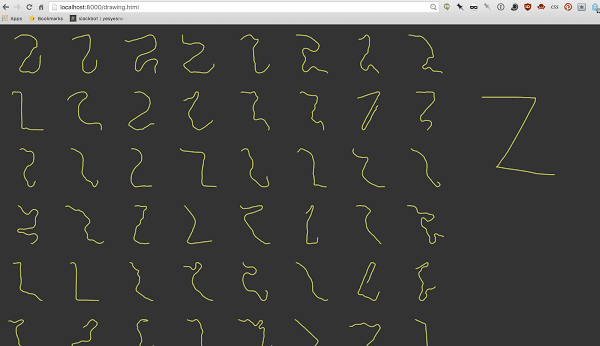

For the draw application, the matching is a very heavy operation. When you draw a line, we need to find the closest match among tens of thousands of line segments. To calculate the distance from one drawing to another we use a metric from the dollar gesture recognizer, which itself involves many distance calculations. In the past, I've used threading and other tricks, but in order to make it work in real time on a client device (including mobile phones) I needed something better. I looked into metric trees for finding nearest neighbors and settled on vantage point trees (JavaScript implementation). A vantage point tree is built from a set of data and a distance metric, and when you put in a new piece of data it quickly gives you a list of the closest values. The first time I saw this work instantly on a mobile phone I was floored. One of the great benefits of this particular vantage point tree implementation is that you can save the tree after it's computed and avoid the cost of computing it again.
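To make the idea concrete, here is a minimal Python sketch of a vantage point tree with a nearest-neighbor query. It is not the project's JavaScript implementation. The names `path_distance`, `build_vp_tree` and `nearest` are hypothetical, and the sketch assumes every gesture has already been resampled to the same number of points, so that a simple mean point-to-point distance can stand in for the recognizer's metric.

```python
import math
import random
from dataclasses import dataclass
from typing import Callable, Optional, Sequence, Tuple

Gesture = Tuple[Tuple[float, float], ...]
Metric = Callable[[Gesture, Gesture], float]


def path_distance(a: Gesture, b: Gesture) -> float:
    """Mean distance between corresponding points (assumes equal lengths)."""
    return sum(math.dist(p, q) for p, q in zip(a, b)) / len(a)


@dataclass
class VPNode:
    item: Gesture                       # the vantage point stored at this node
    radius: float = 0.0                 # median distance to the remaining items
    inside: Optional["VPNode"] = None   # items at distance <= radius
    outside: Optional["VPNode"] = None  # items at distance >= radius


def build_vp_tree(items: Sequence[Gesture], dist: Metric,
                  rng: random.Random) -> Optional[VPNode]:
    rest = list(items)
    if not rest:
        return None
    # Pick a random vantage point and split the rest at the median distance.
    node = VPNode(item=rest.pop(rng.randrange(len(rest))))
    if rest:
        rest.sort(key=lambda g: dist(node.item, g))
        mid = len(rest) // 2
        node.radius = dist(node.item, rest[mid])
        node.inside = build_vp_tree(rest[:mid], dist, rng)
        node.outside = build_vp_tree(rest[mid:], dist, rng)
    return node


def nearest(root: Optional[VPNode], query: Gesture,
            dist: Metric) -> Tuple[Optional[Gesture], float]:
    best_item: Optional[Gesture] = None
    best_d = math.inf

    def visit(node: Optional[VPNode]) -> None:
        nonlocal best_item, best_d
        if node is None:
            return
        d = dist(query, node.item)
        if d < best_d:
            best_item, best_d = node.item, d
        # Search the side the query falls on first.
        if d < node.radius:
            near, far = node.inside, node.outside
        else:
            near, far = node.outside, node.inside
        visit(near)
        # Cross the boundary only if a closer item could lie beyond it.
        if abs(d - node.radius) <= best_d:
            visit(far)

    visit(root)
    return best_item, best_d
```

The pruning step relies on the triangle inequality, so whatever distance is plugged in has to be a true metric. Most queries then touch only a small part of the tree, which is what makes the lookup cheap enough for a phone.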

Another challenge of making it work without a server is getting the data loaded onto a mobile device. For draw, the tree and line segment data was over 12 MB, and the images are quite large. We wanted the experience to feel quick and responsive, so the goal was to keep the download small. Our solution was to load data progressively. In the draw app we split the vantage point tree data set into 5 pieces; when the app loads it only loads the first chunk, and then every 10 seconds it loads another chunk of data in the background, so essentially the app gets better and better for the first minute of being used. In the drag app we also worked hard to cache images so that as you drag, new images are loaded in the background.
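The chunked loading schedule can be sketched as follows. This is an illustration in Python with `asyncio`, not the app's browser code: `load_progressively`, `fake_fetch` and the `tree-part-N.json` file names are all hypothetical, and the fetch is simulated so the sketch runs without a network.

```python
import asyncio
from typing import Awaitable, Callable, List, Sequence


async def load_progressively(
    chunk_urls: Sequence[str],
    fetch: Callable[[str], Awaitable[List[str]]],
    on_chunk: Callable[[List[str]], None],
    interval_s: float = 10.0,
) -> None:
    """Load the first chunk right away, then one more every interval_s seconds."""
    for i, url in enumerate(chunk_urls):
        if i > 0:
            await asyncio.sleep(interval_s)
        on_chunk(await fetch(url))


async def fake_fetch(url: str) -> List[str]:
    # Stand-in for a network request; returns three made-up line records.
    return [f"{url}#line{i}" for i in range(3)]


def demo(interval_s: float = 0.0) -> List[str]:
    searchable: List[str] = []  # grows as chunks arrive
    urls = [f"tree-part-{i}.json" for i in range(5)]
    asyncio.run(load_progressively(urls, fake_fetch, searchable.extend, interval_s))
    return searchable
```

In a real app the loader would run as a background task, so searches can start as soon as the first chunk has arrived and simply see more candidates over time.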

Finally, one thing I found harder than expected was making a pre-loader for both apps, so that the initial delay as data loads would be understandable. I used the progress callback on the ajax requests and, on the pixi.js side, checked that images that were loading asynchronously had actually loaded, and used that to drive the preload message.

Connected Line

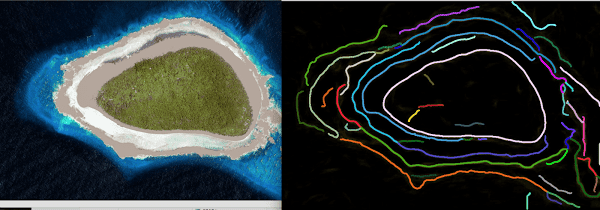

For drag, I wanted to create an endless line from the lines we found in the edge detection. The first step was to filter lines from the line detection algorithm and identify long lines that start on one edge and end on one of the three other edges.
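A filter of this kind might look like the sketch below. The function names, the pixel `margin` tolerance and the rule for resolving corner points are assumptions made for illustration; the source does not say how close to an edge a line had to be.

```python
from typing import Optional, Sequence, Tuple

Point = Tuple[float, float]


def edge_of(p: Point, width: float, height: float,
            margin: float = 2.0) -> Optional[str]:
    """Name the image edge a point lies on, or None if it is in the interior.

    Corner points are assigned to the left/right edge first (an arbitrary choice).
    """
    x, y = p
    if x <= margin:
        return "left"
    if x >= width - margin:
        return "right"
    if y <= margin:
        return "top"
    if y >= height - margin:
        return "bottom"
    return None


def spans_two_edges(line: Sequence[Point], width: float, height: float,
                    margin: float = 2.0) -> bool:
    """True if the polyline starts on one edge and ends on a different edge."""
    if len(line) < 2:
        return False
    start = edge_of(line[0], width, height, margin)
    end = edge_of(line[-1], width, height, margin)
    return start is not None and end is not None and start != end
```

For example, in a 100 by 100 image a line running from `(0, 40)` to `(100, 70)` passes the filter, while one that ends in the middle of the image, or returns to the edge it started on, does not.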

Once I had a set of long lines (or to use a more accurate term, polylines, a collection of connected points), in order to connect them I converted these lines into a set of angle changes. Usually when you think of a polyline you imagine it as a set of points: point a is connected to point b which is connected to point c. Instead, you can treat the line as a set of angle changes: Move forward and rotate some amount, move forward and rotate some amount. A good way to visualize this is to think about wire bending machines, which take a piece of wire and as it's being extruded perform rotations. The shape of the drawing comes from turning.
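As a rough sketch of that change of representation (the function names are hypothetical, not taken from the project), a polyline can be split into a starting heading, a list of segment lengths and a list of turns, and then rebuilt from them:

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def wrap_angle(a: float) -> float:
    """Wrap an angle in radians into [-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))


def to_turns(points: Sequence[Point]) -> Tuple[float, List[float], List[float]]:
    """Return (first heading, segment lengths, turn between consecutive segments).

    Needs at least two points.
    """
    headings, lengths = [], []
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        headings.append(math.atan2(y1 - y0, x1 - x0))
        lengths.append(math.hypot(x1 - x0, y1 - y0))
    turns = [wrap_angle(b - a) for a, b in zip(headings, headings[1:])]
    return headings[0], lengths, turns


def from_turns(start: Point, heading: float, lengths: Sequence[float],
               turns: Sequence[float]) -> List[Point]:
    """Rebuild the points: move forward, rotate, move forward, rotate."""
    x, y = start
    points = [(x, y)]
    for i, length in enumerate(lengths):
        if i > 0:
            heading += turns[i - 1]
        x += length * math.cos(heading)
        y += length * math.sin(heading)
        points.append((x, y))
    return points
```

Three sides of a unit square, `[(0, 0), (1, 0), (1, 1), (0, 1)]`, become three unit lengths and two quarter turns, and feeding those back into `from_turns` recovers the original points up to floating point error.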

If you consider the line as angle changes and not points, it becomes easier to combine lines into one larger line with fewer discontinuities - rather than stitching points you are essentially adding relative angle changes. In order to add a line, you take the current angle of the main line and add to it the relative changes of the line you want to add.
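A minimal sketch of that joining step, under the assumption that the new line's own absolute orientation is simply discarded (its first turn is treated as zero) so that it continues in whatever direction the main line is heading. `relative_steps` and `append_line` are hypothetical names.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def wrap_angle(a: float) -> float:
    """Wrap an angle in radians into [-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))


def relative_steps(points: Sequence[Point]) -> List[Tuple[float, float]]:
    """One (turn, length) pair per segment; the first turn is 0 by convention."""
    steps = []
    prev_heading = None
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        heading = math.atan2(y1 - y0, x1 - x0)
        turn = 0.0 if prev_heading is None else wrap_angle(heading - prev_heading)
        steps.append((turn, math.hypot(x1 - x0, y1 - y0)))
        prev_heading = heading
    return steps


def append_line(main: Sequence[Point], new_line: Sequence[Point]) -> List[Point]:
    """Continue `main` (at least two points) with the relative turns of `new_line`."""
    (x0, y0), (x, y) = main[-2], main[-1]
    heading = math.atan2(y - y0, x - x0)  # current angle of the main line
    joined = list(main)
    for turn, length in relative_steps(new_line):
        heading += turn
        x += length * math.cos(heading)
        y += length * math.sin(heading)
        joined.append((x, y))
    return joined
```

Appending an "up, then right" line such as `[(0, 0), (0, 1), (1, 1)]` to a main line heading right from `(0, 0)` to `(1, 0)` continues it straight on to `(2, 0)` and then applies the same right-hand quarter turn, ending at `(2, -1)`.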

As a side note, I've used this technique of converting a line into a set of angle changes for artistic exploitation - you can make drawings "uncurl" similar to how wire can curl and uncurl. Some examples: one, two, three.

This angle calculation is what allows us to steer the line as you drag - we calculate how far off the main angle is from where we want to be and we look for a picture that will help the most in getting the line going in the right direction. It's all a matter of thinking relatively.
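One way to picture the steering step is the sketch below. It rests on an assumption the source does not spell out: that each candidate image can be summarized by the net turn of its line (the sum of its relative angle changes). `pick_next` and the candidate names are hypothetical.

```python
import math
from typing import Dict


def wrap_angle(a: float) -> float:
    """Wrap an angle in radians into [-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))


def pick_next(current_heading: float, target_heading: float,
              net_turns: Dict[str, float]) -> str:
    """Pick the candidate whose net turn leaves the line closest to the target."""
    def remaining_error(name: str) -> float:
        heading_after = current_heading + net_turns[name]
        return abs(wrap_angle(heading_after - target_heading))
    return min(net_turns, key=remaining_error)
```

With the line currently heading at angle 0 and the drag pointing towards `pi / 2`, candidates with net turns of 0.1, 1.4 and -1.0 radians would be ranked by how much error remains after each, so the 1.4 radian candidate is chosen.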

Finally, I just want to say that this was a really fun project to be involved with. It's exciting as an artist to be asked to use a data set as lovely as these images and I'm honored the Data Arts team reached out. I hope you have fun experimenting with it!

Except as otherwise noted, the content of this page is licensed under the Creative Commons Attribution 4.0 License, and code samples are licensed under the Apache 2.0 License. For details, see the Google Developers Site Policies. Java is a registered trademark of Oracle and/or its affiliates.

Last updated 2016-12-15 UTC.

“Land Lines” - Start with a line, let the planet complete the picture.

URL: lines.chromeexperiments.com. Google Data Arts Team project, in collaboration with artist Zach Lieberman.

"Land Lines" is a Chrome Experiment that leverages the wealth of visual data from satellite images in creative ways. Users can explore Google Earth's satellite imagery through gestures - "Draw" to find images that match your lines; "Drag" to create an infinite line of connected rivers, highways, and coastlines. By utilizing a combination of machine learning, optimized algorithms, and graphics card power, the experiment runs efficiently on your phone’s web browser, eliminating the need for backend servers.

“Draw” to find satellite images that match your every line

“Drag” to create an infinite line of connected rivers, highways and coastlines.

Background:

Building on our successful collaboration with the Google Earth team for the Earth View website and Times Square billboard, we continued to explore ways to leverage the rich, colorful data from Earth's images. Our aim was not to focus solely on geographical data but to create a playful and unexpected browsing experience using gesture input on mobile Chrome browsers. We published a technical case study detailing the project's creation and open-sourced the code on GitHub to inspire developers.

Design Process and My Role:

As the design lead of the project team, I initiated the project, managed the artist relationship, led a prototyping design sprint, and oversaw the design of the website and marketing materials.

Prototyping Interactions:

We partnered with media artist Zach Lieberman, renowned for his innovative experimental drawing and animation tools. The project commenced with a three-week design sprint during which we prototyped various browsing interactions with the data. As a team, we were consistently drawn to the dominant lines in the images—highways, rivers, edges of mountains, and plots of land. We eventually settled on two interactions: drawing and dragging. I was responsible for designing the UX to showcase these interactions.

Prototype: Matching a drawn line with the satellite image with a similar dominant line.

Prototype: Matching and creating an endless line based on the gesture input.

Prototype: Plotting a number of satellite images with the t-SNE technique.

UI/UX Design:

Early design explorations of the web UI

Final design

Motion sketch created in After Effects, visualizing how the final UX would look and feel.

In the year following launch, "Land Lines" garnered over 18 million interactions. It was featured on the front page of Reddit, became #1 in the "Internet is Beautiful" category, and topped HackerNews. The project received widespread press coverage, with outlets like Wired referring to it as "mesmerizing" and Curbed calling it "cartographic magic".

Webby Award Winner

Gold Pencil from OneShow

FWA Site of the Day

Awwwards Site of the Day, blog feature, and inclusion in their eBook, Brain Food

Example output from the line detection processing. The dominant line is highlighted in red while secondary lines are highlighted in green.

An early example of gesture matching using vantage point trees, where the drawn input is on the right and the closest results on the left.

Another example of user gesture analysis, where the drawn input is on the right and the closest results on the left.

Land Lines: Trace an Infinite Path Around the Planet Using Maps

- Written by AD Editorial Team

- Published on December 20, 2016

Land Lines, a new Chrome Experiment exploiting the satellite image data collated by Google Maps, allows anyone (cartographic aficionado or otherwise) to marvel at the contours of the world through gestures. It is designed to detect dominant visual lines in a dataset of thousands of images, cut down from over 50,000 using a combination of OpenCV Structured Forests and ImageJ’s Ridge Detection. Users can simply "draw" or "drag" on a mobile browser or on a desktop to "create an infinite line of connected rivers, highways and coastlines."

Interestingly, by employing "a combination of machine learning, optimized algorithms, and graphics card power," the experiment is able to run efficiently on a web browser without a need for heavy backend servers. The experiment has been made by Zach Lieberman, Matt Felsen, and the Data Arts Team.

Machine learning and line detection algorithms were used to preprocess all images and identify the dominant lines. This made it possible to analyze brushstrokes and retrieve the matching image efficiently without the need for a server. The project was made in collaboration by Zach Lieberman and the Data Arts Team.

Since 2009, coders have created thousands of amazing experiments using Chrome, Android, AI, WebVR, AR and more. We're showcasing projects here, along with helpful tools and resources, to inspire others to create new experiments.

Dec 15, 2016 · Take a break this holiday season and paint with satellite images of the Earth through a new experiment called Land Lines. The project lets you explore Google Earth images in unexpected ways through gesture. Earth provides the palette; your fingers, the paintbrush. There are two ways to explore–drag or draw.

Apr 13, 2018 · Land Lines is a Chrome experiment made by Zach Lieberman, Matt Felsen, and the Google Data Arts Team. In "DRAW" mode, you can draw a squiggly line or shape and see an aerial view of somewhere on earth that contains a similar shape. Once a map appears, you are told the general location.
